[ECCV20] LEMMA: A Multi-view Dataset for Learning Multi-agent Multi-task Activities

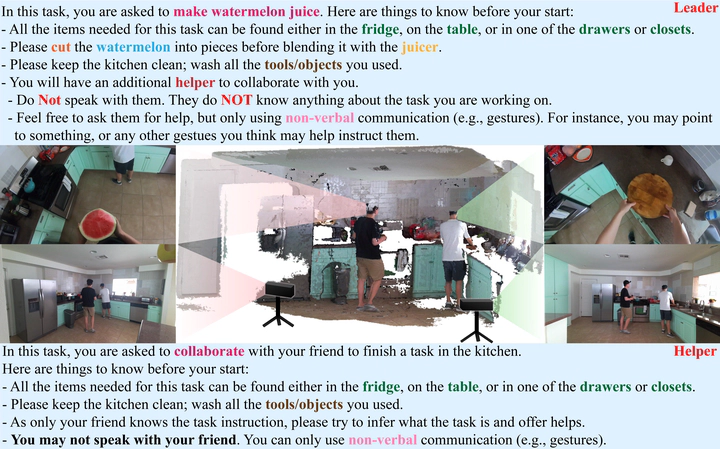

An exemplar task instruction of making juice for two agents in a Multi-agent Single-task ($2 \times 1$) scenario. Middle: Point clouds, TPVs, and FPVs.

Abstract

Understanding and interpreting human actions is a long-standing challenge and a critical indicator of perception in artificial intelligence. However, a few essential components of daily human activities are largely missing from prior literature, including goal-directed actions, concurrent multi-tasking, and collaboration among multiple agents. We introduce the LEMMA dataset to provide a single home for addressing these missing dimensions with meticulously designed settings, wherein the number of tasks and agents varies to highlight different learning objectives. We densely annotate the atomic actions with human-object interactions to provide ground truth for the compositionality, scheduling, and assignment of daily activities. We further devise challenging compositional action recognition and action/task anticipation benchmarks with baseline models to measure the capability of compositional action understanding and temporal reasoning. We hope this effort will drive the machine vision community to examine goal-directed human activities and to further study task scheduling and assignment in the real world.