[ICCV23] Full-Body Articulated Human-Object Interaction

Abstract

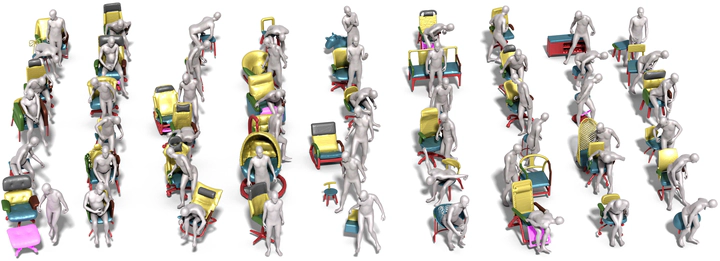

Fine-grained capture of 3D Human-Object Interactions (HOIs) enhances human activity comprehension and supports various downstream visual tasks. However, previous models often assume that humans interact with rigid objects using only a few body parts, which limits their applicability. In this paper, we address the challenging problem of Full-Body Articulated Human-Object Interaction (f-AHOI), in which the whole human body interacts with articulated objects whose parts are connected by movable joints. We introduce CHAIRS, a large-scale motion-captured f-AHOI dataset comprising 17.3 hours of diverse interactions involving 46 participants and 81 articulated and rigid sittable objects. CHAIRS provides 3D meshes of both humans and articulated objects throughout the interactive sequences, offering realistic and physically plausible full-body interactions. We demonstrate the utility of CHAIRS through object pose estimation. Leveraging the geometric relationships inherent in HOI, we propose a pioneering model that uses human pose estimation to address articulated object pose and shape estimation in whole-body interactions. Given an image and an estimated human pose, our model reconstructs the object’s pose and shape, then refines the reconstruction using a learned interaction prior. In two evaluation scenarios, our model significantly outperforms baseline methods. Additionally, we showcase the value of CHAIRS in a downstream task: generating human poses conditioned on interaction with articulated objects. We anticipate that the availability of CHAIRS will advance the community’s understanding of finer-grained interactions.